Hacking the SEAMs:

Elevating Digital Autonomy and Agency for Humans

Richard S. Whitt[1]*

“Certainty hardens our minds against possibility.”

–Ellen Langer

The time has come to challenge the predominant paradigm of the World Wide Web. We need to replace controlling “SEAMs” with empowering “HAACS.”

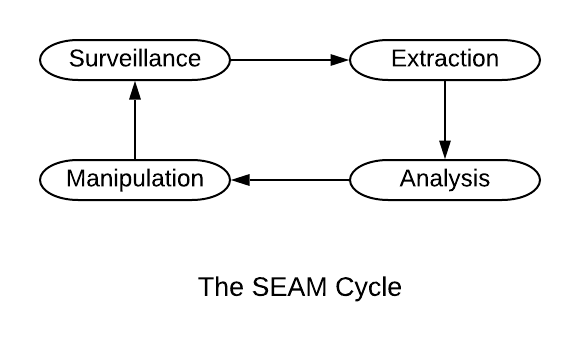

Over the past two decades, Web platform ecosystems have been employing the SEAMs paradigm—Surveil users, Extract data, Analyze for insights, and Manipulate for impact. The SEAMs paradigm is embedded as reinforcing feedback cycles in computational systems that mediate, and seek to control, aspects of human experience.

Fronting that SEAMs paradigm are unbalanced multisided platforms (treating patrons as mere users), Institutional AIs (consequential and inscrutable decision engines), and asymmetrical interfaces (one-way device screens, environmental scenes, and bureaucratic unseens). Operating behind all of this “cloudtech,” SEAMs-based feedback cycles continually import reams of personal data, and export concerted attempts to influence users.

While holding these Web platform ecosystems accountable is absolutely necessary work, by itself it does not engender true systems change. The approach suggested here is to challenge, and eventually replace, the underlying SEAMs paradigm itself with a far more human-centric one.

The proposed HAACS paradigm is premised on a different approach—human autonomy and agency, via computational systems. Rather than feed controlling tech systems, the HAACS paradigm supports new ecosystems that empower ordinary human beings. This means building institutions, governance frameworks, and technologies that:

Enhance and promote human autonomy (thought) and agency (action);

Conceptualize personal data as flows of digital lifestreams, managed by individuals and communities as stewards under commons and fiduciary law-based governance;

Introduce trustworthy entities, such as digital fiduciaries, to help manage individual and collective digital interactions;

Create Personal AIs, digital agents that represent the human being vis-à-vis Institutional AIs operated by corporate and governmental interests; and

Craft symmetrical interfaces that allow humans to directly engage with, and challenge, controlling computational systems.

Put more simply, these proposals translate into two compact terms: the human governance formula of D≥A (our digital rights should exceed, or at least equal, our analog rights), and the technology design principle of e2a (edge-to-all), as instantiated in various “edgetech” tools.

While the Age of Data remains in its infancy, time is growing short to confront its many underlying assumptions. The proposed new HAACS paradigm represents one such opportunity. Some real-world proposals in Appendix A would leverage multiple ecosystem-building opportunities simultaneously across technology, market, policy, and social environments.

Prologue and Overview: Founding A New Paradigm of Trust

Abiding in the long shadow of a global pandemic, with its pernicious economic and societal fallout, some of us hold a hard-earned opportunity to pause and consider where exactly we are standing. By all accounts, our many intertwined social systems are not serving most of us very well. Some of these systems are failing spectacularly before our eyes—whether falling prey to black swans,[2] or grey rhinos,[3] or simply the ordinary challenges of everyday life.

The likelier pathways leading us to the horizon are not promising: persistent risks to human health, economic vulnerabilities and disparities, systemic racial injustice, cultural clashes, political divides. And still in the offing, large-scale environmental disaster.

One common denominator seems to be that our fundamental freedoms as human beings—the thoughtful autonomy of our inner selves, and the impactful agency of our outer selves—are in real jeopardy. Too often our predominant social systems negate personal context, ignore mutual relationship, and undermine more inclusive perspectives. They constrain more than they liberate.

Even the coolness factor and convenience of our digital technologies mask subtle forms of (more or less) voluntary subjugation. Today, corporations and governments alike are subjecting each of us to a one-sided mix of online platforms, computational systems, and interfaces operating behind what can be thought of as our “screens, scenes, and unseens.” The purpose of all this impressive yet asymmetric “cloudtech” has become clearer with time. Those entities flourishing in the Web platform ecosystem have been perfecting what could be thought of as the SEAMs paradigm. Under this animating principle, the platforms Surveil people, Extract their personal data, Analyze that data for useful insights, and then circle back to Manipulate those same people in various ways. The end goals of this feedback cycle? Ageless ones of greater power, control, and money.

Holding these Web platforms and their ecosystems more accountable for their practices is a necessary objective, particularly in a post-COVID-19 landscape. Nonetheless, this paper focuses on the complementary, more aspirational goal of building novel ecosystems that elevate, rather than constrict, the autonomy and agency of ordinary human beings vis-à-vis digital technologies.

One source of guidance is Donella Meadows, the great teacher of complexity theory. Meadows observes that there are many ways to alter existing systems so that they “produce more of what we want and less of that which is undesirable.”[4] She charts out a dozen different kinds of leverage points to intervene in floundering systems.[5] Examples include altering the balancing and reinforcing feedback loops (nos. 8 and 7), modifying information flows (no. 6), and creating new forms of self-organization (no. 4).[6]

However, Meadows notes, the single most effective approach is to directly challenge the existing paradigm—with its “great big unstated assumptions”—propping up a suboptimal system.[7] We can do so in two ways: relentlessly pointing out the anomalies and failures of that prevailing paradigm, while also working with active change agents from within the foundations of a new paradigm. As Meadows puts it, “we change paradigms by building a model of the system, which takes us outside the system and forces us to see it whole.”[8]

In light of our current shared societal crises, we can rethink and reshape how digital technologies can be designed to promote and even enhance our individual and collective humanity. As this paper explores, stakeholders have windows of opportunity to support a new Web paradigm: namely, human autonomy and agency via computational systems, or “HAACS.” This new paradigm seeks not just to reduce the harms to Web users emanating from the predominant Web platform ecosystems, but to actively promote the best interests of users as actual human beings.

There are two proposed ways of encapsulating the HAACS paradigm as more precise formulas to guide real action. For institutional governance, D≥A stands for the proposition that our rights as human beings in the digital world should exceed, or at least equal, our rights in the analog world.[9] For technology design, e2a is shorthand for “edge-to-all,” denoting that our technologies primarily should serve the interests of end users at the network’s edge.[10] Together these two formulas help tether higher level concepts to more concrete outcomes.

In the digital world, four key modes of mediation can help enable the HAACS paradigm, and push back against corresponding elements of the SEAMs paradigm: (1) the ways we experience the world: digital lifestreams; (2) the ways we gain and exert control: trustworthy fiduciaries; (3) the ways we virtualize ourselves: Personal AIs; and (4) the ways we connect with each other: symmetrical interfaces.

This paper’s purpose is to shed light on various pathways forward to HAACS-founded futures. Part I lays out the case for shifting away from the current paradigm of unbalanced platforms, pervasive computational systems, asymmetric interfaces, and exploitative SEAMs feedback cycles. Part II establishes why holding incumbent online providers accountable is absolutely essential, but also incomplete. Part III describes what is at stake: human autonomy and agency, the “HAA” of the proposed new paradigm. Parts IV and V examine two particular elements of the “what” of a HAACS research agenda: digital lifestreams, and digital fiduciaries. Part VI focuses on two new agential technology tools: Personal AIs, and symmetrical interfaces. Finally, Part VII and Appendix A together supply the “how” in a detailed action plan to carry out interrelated tasks across multiple domains.

By necessity, this paper is just a sketch, a momentary snapshot of a vast landscape. Much remains to be added, subtracted, fought over, agreed to, and hopefully adopted in some actual places and times in the real world.[11] Crucially, one can reasonably push back against, and even reject, the seemingly dire assessment presented here—and yet still acknowledge that all of us can and should be expecting something appreciably better from the Web.

THE WHY: Refusing to Cede Ourselves to SEAMs of Control

For many people, human autonomy and agency—the liberty to live our lives with meaning and intentionality—are core principles of modern life. And yet, defining these seemingly foundational elements of the self can be challenging.[12] As we shall see in Part III, autonomy can be conceived of as our freedom of thought, while agency amounts to our freedom of action. Taken together, these attributes help define us as unique, purposive individuals, and members of chosen communities.

This section sketches out the multifaceted challenges before us: contending with the unbalanced power dynamics of multisided online platforms, the advent of pervasive all-powerful computational systems, their asymmetric screens, scenes, and unseens interfaces, and exploitative SEAMs feedback cycles. Collectively, one can envision these entities and elements as comprising a Web platforms ecosystem, employing a variety of cloudtech mechanisms, all operating under the SEAMs paradigm.

While platforms are the economic drivers and computational systems the technology instantiations, one must not forget that on all sides there are actual human beings behind each and every action. Web users tend to be drawn to platforms by siren songs of convenience and functionality. The platform ecosystem players—whether grouped together as corporations large and small, or government agencies global, national, and local—have their own motivations. Typically, their institutional incentives amount to exercising power and control, for their own pecuniary or political or other ends.

While the human story is a timeless one, the economic and technological implements are unique in history. Collectively, these institutions now have the means, and the incentives, on an unprecedented scope and scale, to substitute their own motivations for our hard-won human intentionalities.[13] As Adam Greenfield puts it, “the deeper propositions presented to us” by contemporary digital technologies are that “everything in life is something to be mediated by networked processes of measurement, analysis, and control.”[14] For those who find that vision, and the practices that support it, problematic, the overarching “why” of this paper promotes one form of concerted pushback.

The Unbalanced Dynamics of Online Platforms

Over thousands of years, economic markets were primarily physical and local.[15] At certain times and in certain places, buyers and sellers connected through farmers’ markets and trade exchanges.[16] These organized gathering spots connected participants to engage in market transactions and other social interactions.

This connectivity function of the ancient Athenian Agora over time became its own standalone business model, deemed by many superior to traditional linear pipeline markets.[17] Over the past twenty years, the platform concept moved into the World Wide Web, and a particular version—the Web platform, and its attendant ecosystem of data brokers, advertisers, marketers, and others—quickly became the prevalent online commercial model.[18]

All platforms create value through matching different groups of people to transact. As Shoshana Zuboff and others have detailed,[19] the version of Web platform ecosystem that dominates today is premised on several sets of players. The User occupies one end, the Platform/Provider of the content/transactions/services the middle, and the Brokers (including advertisers and marketers) the other end.[20] The configuration is simple enough: the Platform/Provider supplies offerings at little to no upfront cost to the User, while data and information gathered about the User is shared with the Brokers, who use such information to target their money-seeking messages to the User.[21] The Platform/Provider in turn takes a financial cut of these transactions.[22]

It is increasingly common to note that in this Web platform ecosystem model, the User is the “object” of the transactions, while the Broker is the true customer and client of the Platform/Provider.[23] Another way of describing it is an unbalanced platform model, where one side obtains decided advantages of power and control over the other.[24] While Web users do receive benefits, in the form of “free” goods and services, they are paying through the extraction and analysis of their personal information—and the subsequent influencing of their aspirations and behaviors by the Platform/Providers and Brokers.[25] However, even today, Web users often do not fully appreciate their decidedly secondary status in this modern-day version of a platform.

While network effects and other economic factors make the current Web platform ecosystem model seem all but inevitable, and even irreplaceable,[26] nothing about this particular configuration of players is deterministic. Only two decades have passed since the model first gained traction in the commercial Web.[27] Countless other, more balanced options are available to be explored, where the end user is a true subject of the relationship. Nonetheless, it is fair to say that today’s Web is premised on this unbalanced approach.

The Pervasive Role of Computational Systems

With ready access to financial resources, technical expertise, and our eyeballs and wallets, Web platforms and players in their ecosystems are busy deploying advanced technologies that together comprise vast computational systems. As we shall see, all this tech occupies increasingly significant mediation roles in the lives of ordinary people.

Computational systems are comprised of nested physical and virtual components.[28] These systems combine various overlays (Web portals, social media offerings, mobile applications) and underlays (network infrastructure, cloud resources, personal devices, and environmental sensors).[29] Considerable quantities of data, derived from users’ fixed and mobile online (and increasingly offline) activities, are perceived as supplying the virtual fuel.[30] At the intelligent core of these systems is the computational element itself—what we shall be calling Institutional AIs.

The largest Web platforms—Google, Facebook, Apple, Microsoft, but also Tencent, Alibaba, and Baidu—have woven themselves highly lucrative ecosystems.[31] Importantly, these immensely powerful cloudtech constructs belong to, and answer to, only a relative few in society. The situation is becoming even more challenging as new generations of “intelligent” devices and applications are introduced into our physical environment. These advances include the Internet of Things (IoT), augmented reality (AR), biometric sensors, distributed ledgers/blockchain, and quantum computing, culminating for some in the enticing vision of the “smart world.”[32]

In short, some combination of digital flows, plus physical interfaces, plus virtual computation, plus human decision-making, is what makes up a computational system. The next two sections will touch on the tightly controlled user interfaces and the data-based feedback cycles that together help import our personal data and export their influence.

The Asymmetry of Screens, Scenes, and Unseens

Computational systems require interfaces. One can think of these as their eyes, ears, and voices, their sensory subsystems. These interfaces are the gateways through which Platforms interact with Users.

Through institutional control over these interfaces, human data and content typically flow in one direction—as we shall see in the “S+E+A” control points of the SEAMs paradigm and its animating feedback cycles. In the other direction flow the shaping influences—the “M” of manipulation in SEAMs. The one-sidedness in transparency, information flow, and control makes the interface an asymmetrical one.

Every day we interact with computational systems via three kinds of interface, envisioned here as digital “screens, scenes, and unseens.”[33] Online screens lead us to the search engines and social media platforms and countless other Web portals in our lives.[34] The Institutional AIs in the computational systems behind them render recommendation engines that guide us to places to shop, or videos to watch, or news content to read.[35] More ominously, these systems (with their user engagement imperative) tend to prioritize the delivery of “fake news,” extremist videos, and dubious advertising.[36]

Environmental scenes are the “smart” devices—cameras, speakers, microphones, sensors, beacons, actuators—scattered throughout our homes, offices, streets, and neighborhoods. These computational systems gather from these interfaces a mix of personal (human) and environmental (rest of world) data.[37] The Ring doorbell placed by your neighbor across the street is but one example.

Bureaucratic unseens are hidden behind the walls of governments and companies. These computational systems render judgments about our basic necessities, and personal interests.[38] These decisions can include hugely life-altering situations, such as who gets a job or who gets fired, who is granted or denied a loan, who receives what form of healthcare, and who warrants a prison sentence.[39]

Interestingly, the progression of interface technologies tends to evolve from more to less visible, or even hidden, forms. What once was an obvious part of the user’s interactions with a system, gradually becomes embedded in local environments and even vanishes altogether. As computer scientist Mark Weiser put it nearly 30 years ago, “the most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it.”[40]

Human engagement with these receding interfaces also becomes less substantive, as part of a “deep tension between convenience and autonomy.”[41] From typing on keyboards, to swiping on screens, to voicing word commands, to the implied acceptance of walking through an IoT-embedded space—the interface context shapes the mode and manner of the interaction. In systems parlance, the feedback loops become more attenuated, or disappear altogether.[42] Traditional concepts like notice and choice can become far less meaningful in these settings. The tradeoff for humans is exchanging control for more simplicity and ease.

In these contexts, technology moves from being a tool for those many sitting at the edge, to becoming its own agent of the underlying cloudtech systems. Interfaces can remove friction, even as they also can foreclose thoughtful engagement. While this progression in itself may well bring benefits, it also renders more muddled the motivations of the computational systems operating quietly behind the screens, scenes, and unseens.

The Exploitations of SEAMs Feedback Cycles

Finally, computational systems require fuel—steady streams of data that in turn render compensation to players in the Web platforms ecosystem. At the platform’s direction, with its pecuniary motivations, the SEAMs cycle has become the “action verb” of the computational system. Per Stafford Beer, “POSIWID,” or “the purpose of a system is what it does.”[43] The SEAMs paradigm is instantiated in exploitative feedback cycles.[44]

SEAMs cycles harness four interlocking control points of the computational action verb. “S” is for surveilling, via devices in the end user’s physical environment.[45] “E” is for extracting the personal and environmental data encased as digital flows.[46] “A” is for analyzing, using advanced algorithmic systems to turn bits of data into inference and information.[47] And “M” is for manipulating, influencing the user’s outward behaviors by altering how she thinks and feels.[48]

The point of this elaborate set of systems is not in the “SEA” control points, which are troubling enough. It is the “M” of manipulation. By focusing primarily on the user data flowing in one direction, we easily can overlook the direction of influence back at the user. These cloudtech systems both import data and export influence.

Figure 1


Some may find the nomenclature of manipulation unduly harsh. By definition, to manipulate means to manage or influence skillfully; it also means to control someone or something to your own advantage.[49] Both meanings match what the SEAMs feedback cycles are actually producing.

The institutional imperative is straightforward: create as much influence over users as you can, so you can make as much money as possible. The predominant Web platform ecosystems would forgo all the considerable expense and effort of investing in and deploying the “SEA” elements of their computational systems if those elements were yielding anything but hugely successful “M” outcomes.

To be clear, those with economic and political power have always wielded technological tools to exploit, and even manipulate, the consumer, the citizen, the human. The history of more benign examples of influence (advertising and marketing), and more pernicious ones (propaganda), is a long one.[50] In consumerist societies, advertising and marketing practices have been used to persuade people to buy goods and services. These practices have morphed over time with technology advances: from newspapers, to radio, to TV—and now to the Web.[51]

What has changed is the sheer power of these new 21st Century ecosystems. “The digital revolution has radically transformed the power of marketing.”[52] The combined reach of the “SEA” control points—the near-ubiquitous devices, the quality and quantity of data, the advanced AI—feeds directly into a greatly-empowered “M” element. There is profound human psychology operating as well in these design decisions. What Zuboff calls the “shadow text” gleaned from human experience helps platforms in turn shape the “public text” of information and connection.[53] Algorithmic amplification of attention-grabbing content further adds to the creation of an online reality.[54] As the feedback cycle progresses, the entire construct constantly evolves, to incorporate ever more subtle nudges, cues, “dark patterns,”[55] and other innovations.

Importantly, SEAMs cycles performed by computational systems, under the direction and incentives of Web platform ecosystems, should not obscure the fact that all of this impressive cloudtech functionality still is being planned and run by human beings. These nested technology and economic systems are only the more visible instantiations of the human drive for control and profit.[56]

Shoshana Zuboff’s in-depth empirical analysis has shed much-needed light on the people behind the algorithms, and their desire to manipulate end users’ behaviors. Senior software engineers and businesspeople at major platform companies confided in her that “the new power is action,” which means “ubiquitous intervention, action, and control.”[57] These Silicon Valley denizens use the term “actuation” to describe this new capability to alter one’s actions; Zuboff labels it “engineered behavioral modification.”[58] She details three different approaches aimed at modifying user behavior: tuning, herding, and conditioning.[59] “Tuning” is the subliminal cues and subtle nudges of “choice architecture.”[60] “Herding” is remotely orchestrating the user’s immediate environment.[61] “Conditioning” reinforces user behaviors, via rewards and recognition.[62]

In all three cases, the end goal is the same: to get a person to do something they otherwise would not do, or, as Zuboff puts it, to “make them dance.”[63] For example, in the case of content delivery to the user, the platform makes more money on content that drives engagement, which can entail dis- or misinformation and extremist content.[64] By programming the system to promote—and amplify—certain kinds of content, the platform is also “programming” the user to accept and interact with that content.

On the selling side, platform operators and their ecosystem partners also can utilize detailed information about the user to extract the maximum amount of money she willingly will part with for a service or product.[65] To the extent this first-degree price discrimination technique is employed, it marks a clear case of using extensive knowledge about us, against us.

Importantly, much of this cloudtech activity happens outside our conscious view. Per Zuboff, “there is no autonomous judgment without awareness.”[66] Frischmann and Selinger make a similar point that what they call “techno-social engineering” can shape our interactions, via programming, conditioning, and control engineered by others, often without our awareness or consent.[67]

Moreover, the sense of “faux” agency provided by robust-seeming interfaces leads many people to believe they remain in charge of their online interactions. When one is unaware of the manipulation, “our unfreedom is most dangerous when it is experienced as the very medium of our freedom.”[68]

If William James was correct—that one’s experience is what one agrees to attend to[69]—it would seem to be a rallying cry for the “intention economy” of the twentieth century. Perhaps the converse becomes more apt for the “influence economy” of the twenty-first century—now, whether I agree to it or not, “I am what attends to me.”

One cannot plausibly hope to retain much of one’s independence of thought and of action in the face of such relentless, pervasive, and super-intelligent SEAMs cycles. Nonetheless, today’s technology and economic and political systems are what we have to work with. So those are the best tools available with which to push back.

THE CHALLENGE: Holding the Platforms Accountable Is Necessary—Yet Insufficient

To those who find the above picture troubling, there are two fundamental options. One is to take steps to create greater degrees of accountability for the actions of players in the Web platform ecosystems. The other is to create entirely different ecosystems, based on an entirely different ethos and overarching paradigm. While this paper calls for pressing forward on both fronts, it will focus primarily on the latter. As this Part will briefly show, holding the platforms accountable for their actions is necessary, yet insufficient, work.

The prevailing policy approaches to countering the negative impacts from Web platform ecosystems amount to minimizing their harmful consequences. Making these systems more accountable to the rest of us, in turn, limits their unilateral reach and authority. This “computational accountability” mode modifies the existing practices of the Web platform ecosystem—including large platform companies, government agencies, and data brokers—while still leaving intact the animating SEAMs paradigm.

Representative steps in the public policy realm to improve computational accountability include: increasing government oversight of large platforms; policing and punishing bad behavior; creating greater transparency to benefit users; enabling more robust data portability between platforms; improving corporate protection of personal data; reducing algorithmic bias in corporate and government bodies; and introducing ethics training in computer science.

Each of these actions is hugely important and necessary to make these computational systems, and their institutional masters, more answerable to the rest of us. As one example, Europe’s General Data Protection Regulation (GDPR) “is a notable achievement in furthering the cause of [protecting] European citizens’ personal data.”[70] Nonetheless, even taken together, such accountability measures may not be enough to significantly alter power imbalances in the current digital landscape.

In each instance, the computational systems themselves still remain largely under the direct control of the underlying platforms and their ecosystem players, with their enormous financial, political, and “network effects” advantages.[71] Platforms’ ability to take on and absorb government accountability mandates may be unprecedented in modern history. Relatedly, larger players often can gain the advantage of “regulatory lock-in,” or the ability to influence, or evade, government rules in ways that smaller players cannot readily duplicate.[72] This threat has been well articulated with regard to the large platforms’ ability to comply (or approximate compliance) with GDPR.[73]

Moreover, regulatory solutions based on making incumbent players more accountable typically rely primarily on behavioral remedies—what could be considered “thou shall not” or “thou shall” injunctions.[74] Such regulations can be difficult to define, adopt, implement, and enforce.[75] Such behavioral remedies also tend to leave existing power asymmetries in place.[76] Users’ current struggles with “consent fatigue” from cookie consent notifications are only symptomatic.[77]

At bottom, an exclusive focus on accountability may well end up conceding too much to the status quo. As Catherine D’Ignazio and Lauren F. Klein observe in their book Data Feminism, accountability measures by themselves can amount to “a tiny Band-Aid for a much larger problem,”[78] and even have the unintended consequence of entrenching existing power.[79] Core aspects of the prevailing SEAMs paradigm, and its enacting business models, may well remain intact. Even fighting to grant users the ability to monetize their own personal information can be seen as accepting the reductivist Silicon Valley credo that “personal data is the new oil.”[80]

Some Limits of the Privacy Concept

The concept of privacy is an important tool for protecting the self. Still, the SEAMs feedback cycles are one reason why privacy, as commonly understood, is insufficient on its own to adequately protect ordinary humans from unwanted incursions.

To the average person on the street, the concept of one’s privacy as an individual has been sold as an irrelevant luxury. Scott McNealy of Sun infamously remarked back in 2003 that, “you have no privacy—get over it.”[81] While at Google, Eric Schmidt posited that, “if you have something that you don’t want anyone to know, maybe you shouldn’t be doing it in the first place.”[82] These types of comments suggest that those within the Web platform ecosystem hope to instill in each of us a sense of resignation, if not outright shame, to counter the natural desire to protect one’s personal and private self from intrusive eyes.

Not surprisingly, then, two common refrains one hears regarding platform privacy are that “they already have all my data anyway,” and “I have nothing to hide.” In both cases, however, the person likely presumes that the platforms are utilizing a linear mechanism to acquire pieces of their daily life—their name, their credit card numbers, an unflattering photo, their favorite beer—to create a profile from which the platform will simply try to sell them some goods and services.

As we have seen, however, this folk understanding understates the threat. The sheer shaping function of the dynamic, feedback loops-based “M” element in the SEAMs cycles is not well understood. Players in the Web platform ecosystem do not (only) want to discover something you might want, in order to sell it to you. The goal of the purveyors of SEAMs cycles is to export their influence, conscious or otherwise.[83] They want to make you do certain things—buy goods and services, provide content, take a stance on a controversial issue, cast a vote for a certain political candidate—that they want you to do.[84] Their end game is to actually manipulate and alter one’s thoughts (autonomy) and behaviors (agency), because it makes for better business.[85] Using privacy policies as a shield to defend one’s sphere of intimacy and vulnerability would not appear to be much of a match for determined SEAMs cycles-based manipulation.

Further, the concept of privacy typically extends only to what has been thought of as personal data. Other forms of data—from shared, to collective, to non-personal—seem ill-suited to individual privacy.[86] Yet the SEAMs cycles churn through all types of data, with potentially pernicious impacts on human society. These impacts include the externalities stemming from entities exerting control over such data in ways that may not harm specific individuals, but still harm society in general.[87]

In short, then, reducing the harmful actions of an already-powerful status quo is vital work, but, by definition, produces only partial societal gains. The point is not to abandon these efforts, but to supplement them. These accountability-type policies then offer a much-needed, but slightly ill-fitting, shield. The times may be calling as well for introducing a sturdy sword. A complementary paradigm, of enhancing real-world human autonomy and agency, would more directly challenge that same cloudtech-enabled status quo.

THE PROPOSED WHAT: Enabling Human Autonomy and Agency

If public policies premised on platform accountability are not sufficient to fully protect us from harm—let alone allow us to promote our own individual and collective interests—what other options are available? If with accountability regimes the rallying cry has been “Do Not Track Me,” the more urgent injunction offered here is “Do Not Hack Me,” or even “Do Not Attack Me.” The remaining part of this paper suggests practices and actions founded on what is called the HAACS paradigm.[88] The premise is that I should have available to me the entities and technologies that will allow me to “hack back” at the SEAMs paradigm, and its beneficiaries.

This section will briefly explore the “HAA” element of the paradigm—autonomy and agency for ordinary human beings. The point of this all-too-brief exercise is to establish the considerable stakes involved when encountering the full force of the early twenty-first century’s Web platform ecosystems. Part IV then will dig into our (pre)conceptions about data. Part V will unpack how digital fiduciaries can bolster human autonomy and agency in the digital world. Part VI then discusses two discrete edgetech tools—Personal AIs and symmetrical interfaces—that can channel these priorities as supporting “CS” elements. Part VII and Appendix A provide a detailed, provisional action plan, the “how” for bringing into reality each of these five related elements.

As noted above, economic and technology systems are rooted in basic human behaviors. No Web platform company or computational system, no asymmetric interfaces or SEAMs feedback cycles, have any meaning outside the purview of the human motivations sustaining them. Relatedly, we cannot hope to push back against the SEAMs paradigm and its supporters, without a firm grasp on what in fact we are fighting for. The section below proposes to start with the human in the middle, and then work forward.

Importantly, the HAACS paradigm is an unfinished work. It is more a promising research agenda than a complete and final concept unto itself. At this early stage, HAACS represents an adaptable stance, better evolved and fleshed out by many people, in organic ways, both bottom-up and top-down. What follows here, and in the remaining sections, should be taken as an open invitation to engage in the conversation.

Why should we begin here? Because any fruitful conversations about technology and economic and social systems are best grounded in the humans behind them all.

For many thousands of years, philosophers and others have been debating the finer points of whether and how we human beings are truly free. What has emerged in some quarters is what amounts to a rough consensus: we are neither totally free beings, nor are we totally determined automatons. Between those two poles remains a vast area of contention.

With a nod towards the experts, I will first appropriate two often-employed terms of autonomy and agency, and attempt to give them a bit of new life. The guides here will be drawn from cross-disciplines, such as phenomenology (in philosophy),[89] the 4E school of cognition for our embodied, embedded, enactive, and extended selves (in cognitive science),[90] and self-determination theory (SDT) (in psychology).[91]

At the outset, the literature suggests that autonomy and agency can be viewed as two separable attributes of the human self.[92] Autonomy is self-direction, self-determination, and self-governance.[93] It amounts to the freedom to decide who one wants to be, in one’s thoughts and intentions. By way of contrast, agency is behavior, interaction, the capacity to take action.[94] It amounts to the freedom to intervene and act in the world. At the extremes, a child’s simple robot can be said to have some agency—without much autonomy—while a prisoner in a dungeon has some autonomy, without much agency.

The two concepts of autonomy and agency amount to deciding to do something, versus being able to do it. The freedom to create and determine your own motivations, versus the freedom to act upon them.[95] They can be considered two flavors of human liberty, and perhaps two fundamental ways to define the human self and her unique identity.[96]

At the same time, thought and action form part of a continuum of human beings existing in the world. The two concepts are closely intertwined, and can be mutually reinforcing, or degrading. They also are matters of degree, across a blend of the (supposed) inner and the outer worlds. To capture this notion of continuity and blending, from here forward we will refer to a singular process of exercising “human autonomy/agency.”

For example, one purchases a new vehicle. What steps were involved in that decision-making process? Likely at the outset some vague, even unconscious or subconscious suppositions about the necessity of a car in modern society, emotional desires to own a certain “sexy” brand, and mental calculation of a cost/benefit analysis. At some point the musings become an intention, marked by certain outward behaviors (checking online for specific offers) and actions (obtaining a bank loan), that lead to the parking lot outside a local automotive dealer. Wherever autonomy ends and agency begins, a robust mix results in a brand-new hybrid vehicle parked in the driveway.

Human autonomy/agency also can be explored through the prism of two kinds of liberty. Freedom “from” something is considered the negative form: for example, autonomy as the freedom from outside coercion and manipulation.[97] Freedom “for” something is deemed the positive form: for example, autonomy as the freedom to develop one’s own thoughts and actions in creative ways.[98]

Neither form of freedom is absolute; both are subject to a variety of internal constraints (urges, needs, genes, personality) and external constraints (environment, society, nature).[99] These constraints present differently in each culture, and each individual. The key is whether and to what extent humans have some element of conscious sway over these “heteronomous” influences. Within the self-determination theory (SDT) school of human psychology, for example, autonomy “is not defined by the absence of external influences, but by one’s assent to such inputs.”[100]

There are also important external bounds that communities can and do place on the exercise of human agency. Traffic lights, restaurant dress codes, not yelling “fire” in a crowded theatre—examples abound. More fundamentally, societies continually deal with the challenging tensions between freedom and justice. Human freedom is relativistic. As Camus put it, “[a]bsolute freedom is the right of the strongest to dominate,” while “[a]bsolute justice is achieved by the suppression of all contradiction: therefore it destroys freedom.”[101] These tensions are best worked out in inclusive, democratic processes.[102]

While constraints on freedom present to some as significant limitations, they do not foreclose all degrees of independence. Indeed, in important ways, our constraints are necessary to our ability to experience our freedoms. Taylor notes that “the only viable freedom is not freedom from constraints but the freedom to operate effectively within them…. Constraints provide the parameters within which thinking [autonomy] and acting [agency] might occur.”[103] Further, as Unger has argued, we are always more than what our circumstances otherwise would dictate.[104] And the process is ceaseless. “The complex processual nature of the self—always changing and developing, always reflecting on and transforming itself, [is] never complete.”[105]

Finally, autonomy/agency is not limited to certain cultures, or certain times. While the ways these attributes of self can be expressed are innumerable and subject to different kinds of outside influences, empirical evidence demonstrates that these still constitute universal and core human capabilities for thinking, and being, and acting in one’s world.[106]

As Part B will explore, human autonomy/agency does not exist in a vacuum. By nature we are mediating creatures, constantly interacting with, and filtering, the world through our sensory and conceptual systems. That mediating role takes on even greater importance with the advance of digital technologies, where technical interfaces and other tools are taking over more of the filtering work of our biology-based mediation systems.

Feedback is what allows information to become action, and provides “the bones of our relationship with the world around us.”[107] As embodied beings, we exist within webs of mediation shaped by our social interactions.[108] Mediation processes are a part of our organic constitution, as our bodies and brains and minds constantly filter in the meaning, and filter out the meaningless.[109] As social beings, we have a long history of delegating our mediations to third parties[110]—news sources, government bodies, religious institutions, trusted friends, and the like.

The point is not somehow to avoid all forms of mediation (an impossible task), but rather to understand better how they operate, and then take a hand in controlling the flows and interfaces that make up the mediation processes. In the terms of the noise/signal duality from information theory,[111] the objective is to achieve human meaning by elevating the signal while suppressing the noise.[112] Better yet, one can align trustworthy outside mediators to help compensate for our cognitive biases and shortcomings, rather than prey on them as exploitable weaknesses.

A useful image for human mediation could be that of an enclosing bubble, with semi-permeable barriers. By affirmatively pushing to expand the bubble outward, one can accept and bring into oneself certain aspects of the world: a new friend, a challenging book, an educational documentary, an inspiring church service. In contrast, by deliberately pulling back to contract the bubble inward, one rejects and moves away from unwanted aspects of the world. When one is in charge of this process, one can control to some degree one’s autonomy of thought and agency of action. When others take more control over these continual processes of self-expansion and self-contraction, the actual human at the center is less in charge.[113]

When trust is embedded in a mediating relationship, one can engage in processes of opening up to a broader range of outside influences.[114] This type of intention-driven openness contrasts with enclosure by default (through one’s fear or anger), as well as forms of “faux” openness defined by others that end up acting against one’s better interests.

Unger has written cogently about this lifelong dialectic process, as “being in the world, without being of it.”[115] He urges us to overcome the duality between exposure and sterility, or as he puts it, “engagement without surrender.”[116] To the point, “It is only by connection with others that we enhance the sentiment of being and developing a self. That all such connections also threaten us with loss of individual distinction and freedom is the contradiction inscribed in our being.”[117] In brief, we are “[c]ontext-shaped but also context-transcending beings. . .”[118]

From fire, to the printing press, to the digital computer, humans have employed technologies to modify and control the external environment, as well as augment human capabilities. Technology is embedded in, and can help open up and enhance, our sensory and other bodily/conceptual/social systems. If the human mind truly is an extended and embodied locus of processes,[119] then technologies reside within that mediated zone.

Technology has become a consistent mediator of its own in our lives. This “mediatization” came in waves: from the mechanical, to the electrical, and now the digital.[120] As with other human forms of mediation, there is little to be gained by closing ourselves off completely from technology as mediator. The point is not to shun it, but to control it. As Verbeek puts it, “Human freedom cannot be saved by shying away from technological mediations, but only by developing free relations to them, dealing in a responsible way with the inevitable mediating roles of technologies in our lives.”[121]

Crucially, technology is not some deterministic, inevitable force or trend. Nor is it some value-neutral tool. Technologies serve as proxies of the persons or entities wielding them. Choices made by designers—what functions to include or exclude, what interfaces are defined or not, what protocols are open or closed—have a profound effect on how the technology is actually used.[122]

This certainly has been the case with the Internet and its overt design principles.[123] To the countless software engineers developing the Internet over several decades, its key attributes amount to the goal of connectivity (the why), the structure of layering (the what), the tool of the agnostic Internet Protocol (the how), and the end-based location of function (the where).[124] In each case, design was founded on specific engineering principles grounded in human values. In particular, the end-to-end (e2e) principle describes a preference for network functions to reside at the ends of the network, rather than in the core.[125] This design attribute reflects a deliberate value statement that disfavors certain functions—such as packet prioritization from the lower network layers—while engendering greater ease of use and openness for the upper application layers.[126]

Other design choice examples are premised on simplistic conceptions of transparency that rely on the outmoded “conduit model” of communications. In these cases, information is assumed to simply move from sender to receiver without any filters or translation processes.[127] Human beings and technologies generally do not work in that manner—unless (like the Internet) they are designed that way.[128]

What Couldry and Hepp call the “mediated construction of reality” is the insight that “the social is constructed from, and through, technologically mediated processes and infrastructures of communications.”[129] Importantly, these technology processes of mediation are outcomes of economic and political forces.[130] Under today’s mediation conditions, they find, “the social construction of reality has become implicated in a deep tension between convenience and autonomy, between force and our need for mutual recognition, that we do not yet know how to resolve.”[131]

The deepest tension, Couldry and Hepp conclude, is between the necessary openness of social life, where we develop our lives autonomously, and “the motivated (and in its own domain, perfectly reasonable) enclosure for commercial ends of the spaces where social life is today being conducted.”[132] As we have seen with the SEAMs feedback cycles, players in platform ecosystems employ the Web’s technologies as a means to harness mediation processes that can influence users, directly or indirectly.

One challenge comes where technology’s many mediations harbor opaque influences. Web ecosystem players mediate in at least two ways. First, via their proffered forms of interface, these entities can provide the outward illusion of autonomy and agency.[133] Second, by creating repetition that fosters a sense of familiarity, these entities can train our behaviors to fit the intentions of the underlying systems.[134]

So, humans filter the world through a blend of biology and technology-based interfaces. While most of us are born with a healthy mix of natural mediation tools, we also design and create new technologies to extend and enhance the reach of those tools. As Part C will explore, these mediation tools take on added importance as humans use them to derive personal meaning from the daily flow of experience. Our self/world mediations help constitute us as autonomous and agential beings in the world.

Mediation tools do not exist in a vacuum. As filtering agents, our personal identities are constantly changing and evolving blends of the autonomous and agential.[135] For many, this means that our lives constantly flow with meaning. “[H]uman experience is characterized by our embedding in webs of meaning arising from our participation in systems of many sorts. . .”[136] Much of the “meaning” may arise, not only from conceptual thought, but as well from the raw feelings derived from our ancestral brains, based on genetic inheritance, sensory inputs, internal bodily inputs, and action dynamics.[137]

Our lived reality is a unique bundle of experiences, interactions, and relationships—a fluid mix of the past (memories), the present (moments), and the future (intentionalities). Hopes, fears, and aspirations are woven together with the experiences of others—family, friends, strangers, communities of interest. This feeling of autonomy and self-determination, as represented in the action of agency, “is what makes us most fully human and thus most able to lead deeply satisfying lives—lives that are meaningful and constructive—perhaps the only lives that are worth living.”[138] My own term for this human experience of the (potentially) meaningful flow of space and time is the individual’s lifestreams.

In phenomenology and the 4E school of cognition, concepts like self and world, the inner and the outer, inhabit more a continuum than a duality.[139] Relational boundaries have been called “the space of the self … the open-ended space in which we continually monitor and transform ourselves over time.”[140] This circle of inner and outer spaces never-endingly turns in on itself, as “a materially grounded domain of possibility that the self has as its horizon of action and imagination.”[141] As Brincker says:

As perspectivally situated agents, we are able to fluidly shift our framework of action judgment and act with constantly changing outlooks depending on the needs and opportunities we perceive in ourselves and our near surroundings in the broader world…. [W]e continuously co-construct and shape our environments and ourselves as agents…. [142]

If we follow the 4E school of cognition, then the role of natural and technological mediation processes is even more important. The scope of human cognition is extracranial, constituted by bodily processes (embodied), and dependent on environmental affordances (embedded and extended).[143] If the self and her environment essentially create each other, whether and how other people and entities seek to control those processes becomes paramount.

Importantly, the twinned human autonomy/agency conception should not suggest a form of isolation or solipsism. The stand-alone, solitary, “cast-iron,” and completely independent individual is simply an unsupported philosophical relic of the past.[144] In fact, there are both individual and collective forms of autonomy and agency. In SDT psychology, “[c]ollective autonomy is experienced by processes of endorsement and decisive identifications.”[145] Our relationships in the world make us social creatures; our connections to the world make us cultural creatures. Our mode of meaning is more than individualistic; it is collective. Ultimately, human autonomy/agency embraces the liberty to define and enact one’s own semi-permeable boundaries between self and rest of world.

As the brief introduction above shows, concepts of human autonomy and agency, mediation and meaning, are broad and deep. Yet even this cursory treatment shows how our technology systems can be engineered to further, or constrict, the essence of our shared humanity.

Below, Parts IV, V, and VI shift to focusing on four key loci where society, technology, and the twinned human autonomy/agency elements intersect. Part IV delves into conceiving of “data” as digital lifestreams, which opens up productive new conceptual spaces for governing and managing these experiential flows of meaning. Part V then turns to the role of trustworthy fiduciaries in promoting our digital life support systems—and fulfilling the human governance formula of D≥A. Finally, Part VI explains how the advanced edgetech tools of Personal AIs and symmetrical interfaces together can transform digital spaces from closed windows to open doors—furthering the proposed design principle of edge-to-all, or e2a.

While each element is important in its own right, combining them in a true ecosystem-building approach best harnesses their impact. Indeed, employing systems-informed approaches will maximize the relative gains from the interwoven elements.[146]

THE PROPOSED WHAT: HAACS and Digital Lifestreams

Part III examined the extraordinary richness of the human experience, including the human and technological systems of mediation that help us create meaning and purpose in our lives. The notion of lifestreams was introduced above as a way of capturing the essence of living in a world that provides opportunities to express our autonomy of thought and agency of action.

This Part combines the lifestreams concept with the digital environment presented by advanced computational systems. Section A explains how the thing we have come to call “data” is an ill-fitting shorthand that fails to capture the varied, social, contextual, and situational aspects of our digital lifestreams. This Section briefly considers alternative data narratives to the prevailing extractive metaphors. Section B applies these insights to conversations about economic doctrine, where data is better perceived as a collective good resource. In that same section, fiduciary law will be posited as a superior legal governance mechanism that provides solid foundations for human and constitutional rights regimes in digital spaces.

What exactly is data? This section proposes some alternative conceptions to the prevailing, commodity-based assumptions foisted by the platform companies—and even many of their critics.

Perhaps few words in the 21st Century have been as widely employed, debated, misunderstood, and abused as “data.”[147] While its provenance extends back several hundred years—well before the annals of modern computer science—data from the beginning has been a rhetorical concept, deriving much of its meaning from the times.[148] Indeed, for some 200 years, the notional West and global North have been building a world based on the collection and analysis of data.[149] Today, the thing we call data increasingly is being defined for society by corporations and governments with their own stakes in the outcome.[150] Such definitional exercises tend to obscure the reality of the Web’s SEAMs feedback cycles and the very human motivations that drive them.

The concept of digital lifestreams described below seeks to take back some of that definitional authority. That alternative conceptualization begins in section 1 with a grounding in the more humanistic term described above as lifestreams, which embraces experiential flows about myself, and my relationships and interests in the world. The “digital” is added to represent the technology-based encasement of those flows. Section 2 briefly summarizes the follow-on possibilities for new data analogies and narratives. Section B then addresses some of the governance implications from the economic, management, and legal perspectives.

Technically speaking, data is a string of binary digits (1s and 0s) intended to connote a piece of reality.[151] Data is a well-known term from computer science, often conceived as something for entities to manage in an information lifecycle.[152] Over time, concepts of data have been imported into the real world of human beings. Each of our lives now is being represented in digital code, by platform companies, data brokers, government agencies, and many others.[153] Three foundational points warrant initial emphasis.

First, while computers are digital devices, human beings and the environments we inhabit are analog.[154] By definition, that means the world produces an endless series of signals representing continuously variable physical quantities.[155]

Often we forget that the digital language of ones and zeroes is merely the encoding—a translation, a rendering, an encasement—and not the reality it seeks to portray. We can experience first-hand how a live musical performance exceeds the highest-fidelity Blu-ray disc—let alone the poorly sampled streaming versions most of us are content to enjoy as is. So, one plausible definition of data is the digital encoding of some selected aspect of reality.
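The gap between an analog signal and its digital encoding can be made concrete with a minimal sketch. The Python code below is offered only as an illustration, under stated assumptions: the function names, the 5 Hz test tone, the 100-samples-per-second rate, and the 3-bit and 16-bit depths are all hypothetical choices made for this example, not drawn from any source cited here. It samples a continuous signal at fixed intervals, rounds each sample to one of a fixed number of levels, and then measures how far the decoded values fall from the original.

```python
import math

def sample_and_quantize(signal, duration_s, sample_rate_hz, bits):
    """Encode a continuous signal (a function of time with values in [-1, 1])
    as integer codes: sample at fixed intervals, then round each sample
    to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    n_samples = int(duration_s * sample_rate_hz)
    codes = []
    for i in range(n_samples):
        t = i / sample_rate_hz
        x = max(-1.0, min(1.0, signal(t)))          # clamp to the encodable range
        codes.append(round((x + 1.0) / 2.0 * (levels - 1)))
    return codes

def decode(codes, bits):
    """Map integer codes back to approximate signal values in [-1, 1]."""
    levels = 2 ** bits
    return [c / (levels - 1) * 2.0 - 1.0 for c in codes]

def max_error(signal, duration_s, sample_rate_hz, bits):
    """Largest gap between the original signal and its decoded encoding,
    measured only at the sampled instants. Whatever the signal did
    between samples is not represented in the encoding at all."""
    codes = sample_and_quantize(signal, duration_s, sample_rate_hz, bits)
    decoded = decode(codes, bits)
    return max(abs(signal(i / sample_rate_hz) - d) for i, d in enumerate(decoded))

def tone(t):
    """Hypothetical 'analog' source: a pure 5 Hz sine wave."""
    return math.sin(2 * math.pi * 5 * t)

coarse = max_error(tone, 1.0, 100, 3)    # 8 levels
fine = max_error(tone, 1.0, 100, 16)     # 65,536 levels
print("3-bit error:", coarse)
print("16-bit error:", fine)
```

Raising the bit depth shrinks the rounding error, but in this sketch it never reaches zero, and nothing about the signal between sampled instants survives in either encoding. In that narrow sense, the encoding is always a selection from the reality it renders.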

Many aspects of our analog life can be rendered in “digitalese,” from the somatic (physical), to the interior (thoughts and feelings), to the exterior (expressions and behaviors), to the conventional identifiers (social security numbers).[156] Each of these is a certain form of data intended to denote aspects of the individual’s relational self. Importantly, this means that the very nature of “data” eludes the monolithic. Indeed:

The process of converting life experience into data always necessarily entails a reduction of that experience – along with the historical and conceptual burdens of the term…. Before there are data, there are people… And there are patterns that cannot be represented – or addressed – by data alone.[157]

To be clear, the formatting shift from analog to digital has brought enormous, tangible benefits to our world. The challenge is to translate life’s ebbs and flows into coherent signals that yield useful insights into our humanity.[158] At this early juncture, it is far from clear that the black-and-white conceptualizations of the binary can ever hope to match multihued existence. The incompleteness and inaccuracy of data may be unavoidable.[159] And the qualified self may well elude the best encapsulations of the quantified self.

We are more than just the data that others have been gathering about us.

Second, the “production” of data is inherently asymmetrical, because it is accomplished for the purposes of private or governmental bodies that use the data.[160] The authors of Data Feminism have made plain “the close relationship between data and power.”[161] Further, “the primary drivers of data processes as forms of social knowledge are institutions external to the social interactions in question.”[162] Utilizing SEAMs cycles, commercial platform companies seek to build quantified constructs meant to represent each of us. Or, at most, my intrinsic value to them as a consumer of stuff. To them, data is a form of property, a resource, a line item on balance sheets—used to infer and know and shape, to the depths of our autonomy, and the span of our “perceptible agency.”[163] To some, data may even represent the final frontier of the marketplace, the ultimate opportunity to convert to financial gain seemingly endless quantities of the world’s digitized stuff.

This pecuniary conception of data supports the narrow and deep commodification of the quantified self as a mere user or consumer of goods and services. Narrow, because the data lifecycle is answerable primarily to the singular desire to control and/or make money from us. Deep, because of the desire to drill down into who we are at our most fundamental levels—our interior milieu—as revealed in our thoughts and feelings. The SEAMs cycle is engaged to gain as much “relevant” information as possible about us, and then influence or even manipulate our autonomous/agential selves.[164]

Even our somatic self, such as facial features, fingerprints, DNA, voice, and gait, is considered fair game, for the identifying characteristics that can reveal, or betray, us.[165] To date, the predominant use cases of physiological (fixed physical characteristics) and behavioral (unique movement patterns) biometrics have been limited to the security needs of authenticating and identifying particular individuals.[166] While these applications bring their own challenges, some would go further, to probe aspects of the self not voluntarily revealed in outward ways.[167]

For example, purveyors of “neuromarketing” support better understanding consumers by analyzing their personal affect, including attention, emotion, valence, and arousal.[168] Using “neurodata” gathered from measuring a person’s facial expression, eye movement, vocalizations, heart rate, sweating, and even brainwaves, neuromarketers aim to “provide deeper and more accurate insight into customers’ motivations.”[169] Such technology advances pave the way for achieving the Silicon Valley ideal of knowing what a user might want, even before she does.[170] Or, more ominously, implanting that very wanting.

As noted above, a human life is much richer and more complex than the narrow and deep commodification of the Web. Those who engage in “computational” thinking de-emphasize many aspects of the human, such as context, culture, and history, as well as cognitive and emotional flexibility and behavioral fluidity.[171] Presumably these aspects of the “self” have meaning only to the extent that they provide insights into how humans decide and act in a marketplace or political environment. The nuance of the actual human being can become lost in the numeric haze.

If we are to be digitized and quantified, it should be on our terms.

Third, the quantified self can both capture and diminish human insights. Based on the discussion above, our “data” can be envisioned in a number of different dimensions:

Heterogeneous (varied). Data is not one, or any, thing. Instead, as commonly invoked, the word obscures the vast scope and range of its reach.

Relational (social). One’s “personal” data is intertwined with countless other human beings, from family to friends to complete strangers. Our streams are constantly crossing and blending.

Contextual (spatial). Data “bleeds” into/out of surrounding spaces. The physical environment of the collection and measurement can determine whether the data can be interpreted correctly as signal, or noise.

Situational (temporal). Data reflects the reality of a certain time and circumstance, but often no further. A person today is not the same person tomorrow.

These dimensions map well to the many selves we show to ourselves and the world: the personal, the familial, the social, the economic, the political. Importantly, people attach their own significance and meanings to these aspects. As one scholar summarizes the inherent mismatch between data purveyors and the rest of us: “Do not mistake the availability of data as permission to remain at a distance.”[172]

The HAACS paradigm endorses giving the human the means to fully translate her multi-faceted lived self into digital code. That translation could run as broadly and as deeply as the technology allows, and the human accepts, encompassing all dimensions in the flow of personal change and evolution. This means voluntarily introducing the richness of one’s lifestreams to the binary of the digital.[173]

Perceiving the online environment as a potential home to one’s digital lifestreams opens up new ways to consider utilizing the technologies of quantification.[174] In breaking away from the monolith of the SEAMs feedback cycles, and accepting the increased blurring of the analog and the digital, we are more in charge of shaping our autonomous self, and enacting our agential self. Then, we can open up creativity, unlock insights, and light up pathways.

Guided by the assistance of one or more trusted intermediaries,[175] the process could focus on what enhances our own human flourishing. This means, for example, a less narrow, less transactional appreciation of digital artifacts: the words and sounds and images we create and gather and share online. Digital lifestreams can provide a more faithful mirroring of one’s constantly shifting internal and external interactions. As such (and perhaps ironically), they promise a more accurate representation of a person’s life than third parties are able to assemble with SEAMs control cycle processes, and their surreptitious surveillance and data gathering and inference engines.

Each human being should have considerable say in whether and how her unique person is presented to herself, and to the rest of the world. For some, this could mean establishing and policing semi-permeable zones of autonomy and agency around oneself. If, however, she chooses to have a digital self, she should be in charge. The resulting vibrant, rich, and ever-changing digital lifestreams can provide a backdrop against which, as analog beings, “we continuously co-construct and shape our environments and ourselves as agents.”[176]

The next section will look briefly at the prevailing extractive narratives around personal data. More organic alternatives will be proposed—including breaths of air, rather than seams of coal.

Creating New Analogies and Narratives

Viewing data with new conceptual lenses opens up novel vistas for further exploration. Digital lifestreams is but one conception of data in our technology-mediated world. As the preceding discussion suggests, we are in desperate need of better ways to conceptualize the stories and practices surrounding our data. Some stakeholders are making the attempt.[177] This section will supply a few additional thoughts.

Framing our data not as things but as flows—an experiential and ever-evolving process—presents a more open-ended and intentional way to conceive of humans. This framing also acknowledges the many ways that “my” data mixes inextricably with “your” data, and the non-personal data (NPD) of our surroundings.[178] In addition, this shift in framing helps move us away from the transactional modes of commerce, and towards the relational mode of human interaction.

Nonetheless, per the dominant theology of Silicon Valley, information about people is perceived to be a form of real property, a resource to be mined and processed and, ultimately, monetized.[179] As Srnicek puts it, “Just like oil, data are a material to be extracted, refined, and used in a variety of ways.”[180] The wording itself gives away the industrial presumption.[181] It “suggests physicality, immutability, context-independence, and intrinsic worth.”[182] Even unwanted bits amount to “data exhaust.”[183] It seems the best counterpoint that advocates can muster is to claim that users should be sharing in the monetization of that non-renewable asset.[184] Data as property, however, is an unfounded economic concept (supra Section IV.B).[185]

Framing personal information as petroleum product crowds out more humanistic conceptions of personal data.[186] More useful metaphors and analogies are well worth investigating. Grounding ourselves in the ecological, rather than the industrial, would be a marked improvement. Imagining data as sunlight,[187] while a far better conception than data as oil, for some could suggest yet another natural resource to be exploited. That framing also can feel a bit removed from the lived human experience.

One suggestion here is to imagine an organismic analogy for computational systems. The interfaces discussed below (“data extraction”) would be the sensory systems, while the AIs (“data analysis”) would be the nervous systems. What then would best connote the bio-flow of sustaining energy? One compelling image is provided by the respiratory system—the human breath. A constant process of converting the surrounding atmosphere into productive respirations—fueling the organism, but in a sustainable, non-rivalrous, non-extractive manner. The breath sustains many different bodily functions. From the molecules of collective air each of us shares, to individual breath momentarily borrowed, and back again—respiration, like data, mixes the personal and non-personal, the individual and communal. The image connotes an organic feedback cycle, one far different than the extractive SEAMs data cycles employed by platform companies and their partners.

Shifting the Data Governance Perspectives

Moving from the world of narratives and metaphors, next we encounter the necessity of determining ways that governments and markets alike should govern this thing we call data. As described above, the SEAMs feedback cycles embedded in today’s Web entail “users” surrendering data from online interactions, often based on one-sided terms of service, in exchange for useful services and goods.[188] Now, even third parties with whom one has no prior relationship can access and utilize one’s data.[189] Implicit in that model is the notion of data as a form of private property, governed by traditional laws of property rights.[190] The HAACS paradigm invites a fundamental reappraisal not just of data as a concept, but also of the follow-on presuppositions about the ways we would govern that data.

This section first looks at economic framings that better encompass the largely non-fungible, potentially excludable, and inherently non-rivalrous nature of data. I then propose relying on legal frameworks grounded in fiduciary doctrine, to fit the asymmetrical power relationship online, and better ground the extension of human and constitutional rights to our digital selves.[191]

If one is to utilize for data something akin to traditional economic principles, an initial question is what kind of “thing” we are talking about. Microeconomists have employed the so-called “factors of production” theory to divide goods and resources into four separate buckets: capital (like factories and forklifts), labor (services), land (natural resources and property), and entrepreneurship (ways of combining the other three factors of production).[192] Based on these traditional categories, data could be one of them, some combination of one or more, its own separate factor, or no factor at all.[193]

A prominent school of thought classifies data as a type of good. Microeconomic theorists classify a good based on answers to two questions: whether it is rivalrous (one’s consumption precludes others from also consuming it), and whether it is excludable (one can prevent others from accessing/owning it).[194] This two-by-two classification scheme yields four distinct categories: private goods, toll goods, public goods, and common pool resources.[195] Most private goods—cars, bonds, bitcoin—are defined as both rivalrous and excludable: one’s consumption eliminates their economic value, and governmental restrictions (usually laws) allow one to keep them away from others.[196]

Data presents an interesting, and likely unique, case. First, at least some of what it entails is non-fungible, meaning it encapsulates something with unique value and meaning.[197] Even if every data packet or stream looks exactly the same from the perspective of a computational network, the shards of reality they purport to represent can differ, even minutely, one from another. Data then is not simply a commodity, like a unit of currency, or a barrel of oil, which tends to hold the same value in every situation.[198]

Second, like a private good, data is at least partially excludable; one theoretically can prevent others from accessing and using it. Excludability is not a fixed characteristic of a resource; it varies depending on context and technology.[199] So, data in some cases can be withheld from others.

Third, and crucially, data is also a non-rivalrous good. This means anyone can utilize it, without necessarily reducing its overall value. In fact, multiple uses of the same data—whether individual or collective—can increase its overall utility and value. So, data can gain value with every use and shared reuse.

Microeconomic theory tells us that this “mixed” set of attributes (non-fungibility, potential excludability, and non-rivalry) makes data what is variously called a toll, club, or collective good.[200] Old school examples of a collective good include cinemas, parks, satellite television, and access to copyrighted works.[201] Membership fees are common to this kind of good (hence the “club” and “toll” terminology).[202]
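For readers who find it helpful to see the two-by-two scheme laid out mechanically, the following is a minimal sketch in Python. The names Good and classify are hypothetical, introduced here only for illustration, and the coding of each example as rivalrous or excludable is an assumption that follows the discussion above rather than a settled economic judgment.

```python
from typing import NamedTuple


class Good(NamedTuple):
    """A resource described by the two questions in the classification scheme."""
    name: str
    rivalrous: bool   # does one's consumption preclude consumption by others?
    excludable: bool  # can others be prevented from accessing or owning it?


def classify(good: Good) -> str:
    """Map the two yes/no answers onto the four categories named in the text."""
    if good.rivalrous and good.excludable:
        return "private good"
    if good.rivalrous:
        return "common pool resource"
    if good.excludable:
        return "toll (club) good"
    return "public good"


# Illustrative codings only (assumptions, not findings).
examples = [
    Good("car", rivalrous=True, excludable=True),
    Good("satellite television", rivalrous=False, excludable=True),
    Good("data, where excludable by design", rivalrous=False, excludable=True),
]

for g in examples:
    print(f"{g.name}: {classify(g)}")
```

On these assumed codings, data lands in the same cell as satellite television. Non-fungibility is deliberately left out of the sketch, because it is not one of the two axes of the scheme.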

A further economic consideration is the presence or absence of externalities, those indicia of incomplete or missing markets. These amount to the “lost signals” about what a person might actually want in the marketplace.[203] Externalities in the data context translate into what market conditions might be good or bad for certain sharing arrangements.

If it is correct that data is largely a non-fungible and non-rivalrous resource by nature, and potentially excludable by design, there are major implications for how we govern data. We need not accept the too-easy assumption that data is just another extractive resource, subject to the same commodification as a barrel of oil. A non-renewable resource is rivalrous by its very nature. Data, for lack of a better word, is renewable, like sunshine, or air, or the radio spectrum. Moreover, where oil and other non-renewable resources are found on private lands, they are excludable goods. Either way, traditional economics shows that data is more like a collective good than a private good. As will be addressed below, this conclusion suggests different mechanisms for managing data.

The Management: Overseen as Commons?

If data is in fact a collective good, a follow-on question is, how is this particular good to be managed? Typically modern society employs institutions as the “rules of the game”—the particular blend of governmental and market structures to govern a good, service, or resource.[204] Here, what are the respective roles for the market, and the government, in establishing the ground rules for accessing data if treated as a collective good?

Traditional answers would either have the market managing private property (with an important assist from government in terms of laws of access and exclusion), or a public entity managing a public good.[205] A blend of institutional choices is possible as well, from the formality and coercive effect of constitutions, laws and regulations, to government co-regulation, to less formal codes of conduct, standards, and norms. In each case, tradeoffs are inevitable, between (for example) degrees of formality, coercion, accountability, and enforceability versus adaptability, flexibility, and diversity.[206]

While market mechanisms generally are the most efficient means for allocating rivalrous goods, traditional property rights could unnecessarily constrain beneficial sharing arrangements.[207] The non-rivalrous nature of data suggests it could be governed as a “commons.”[208] Importantly, a commons management strategy can be implemented in a variety of institutional forms.[209] Part of Elinor Ostrom’s genius was perceiving the commons as occupying a space between the two governance poles of government and market—what she labelled the “monocentric hierarchies.”[210] Her conception of “polycentric governance” by a like-minded community was intended to address the collective-action challenges stemming from a need to manage common pool resources.[211]

Data also can be likened to other intangibles, like ideas, which can be said to constitute part of an “intellectual infrastructure.”[212] Frischmann notes the difficulty of applying infrastructure concepts to “the fluid, continuous, and dynamic nature of cultural intellectual systems.”[213] The related concept of a “knowledge commons” would govern the management and production of intellectual and cultural resources.[214] Here, the institutional sharing of resources would occur among the members of a community.[215] A similar story may be possible for many data management arrangements.[216]

This brief analysis also reveals one key to the enormous success enjoyed by platforms in today’s data-centric economy. While multiple entities can leverage personal data about a user non-rivalrously, in a kind of multiplier effect, at the same time the user has found it difficult physically and virtually to exclude these same entities from accessing and using her data.[217] In economic terms, the platforms treat one’s data as a commons, to be enclosed within their business models, for their own gain. In so doing, these companies privatize the benefits to themselves, and socialize the costs to others, including society and individual users. Taleb has a name for this phenomenon: these entities lack “skin in the game,” which he likens to avoiding “contact with reality.”[218]

Where economics then can have some rational say, the governance direction seems to be away from private property law, and towards more relational conceptions of resource management, including the commons. Legal governance will be considered next.

The Law: Fiduciary Duties and Human Rights

As noted above, data protection regimes essentially accept as given the current Web ecosystem, and the “transactional paradigm” of reducing human beings to conduits of static data points. Once, however, we make the fundamental narrative shift from the transactional mode to the relational mode, then the governing legal structures and regulations can shift accordingly. What legal frameworks other than private property law can fit this new conception of data?